The Compound Effect: From One Agent to an AI Organization

By Conny Lazo

Builder of AI orchestras. Project Manager. Shipping things with agents.

Every agent wakes up brain-dead.

That's the brutal truth nobody talks about. Your Opus, your Sonnet, your carefully crafted prompts — they remember nothing from yesterday. Every session is day one.

Until you fix memory, you're just burning tokens on repeat conversations.

Here's how I built continuity into my AI organization. And why it changes everything.

Memory Is Everything

I learned this the hard way. My first Content Pipeline Orchestra kept asking me the same questions. "What's my voice?" "Who's the target audience?" "What topics should I avoid?"

Every. Single. Session.

I was training the same agent multiple times a day. Massive waste.

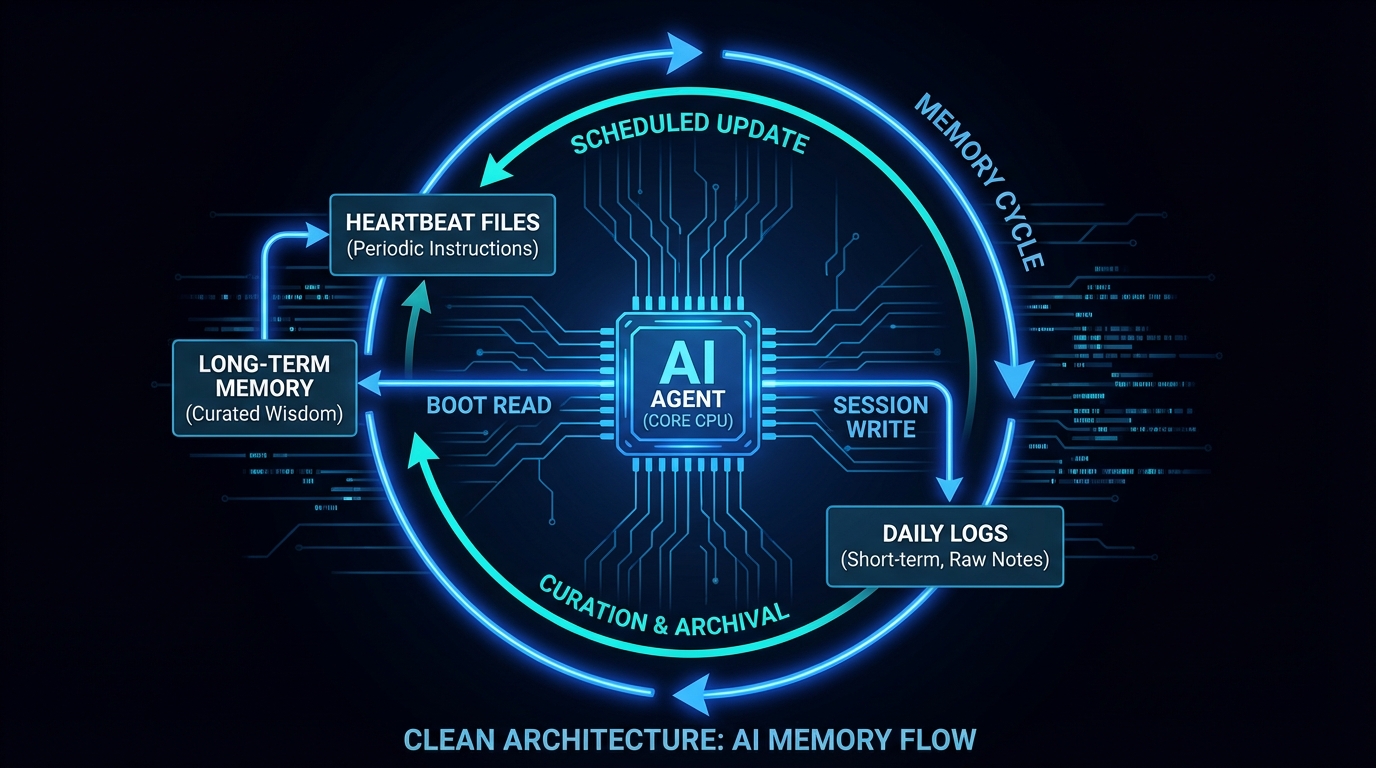

So I built a memory system:

- Daily logs: Raw notes of what happened (memory/2026-02-16.md)

- Long-term memory: Curated wisdom and decisions (MEMORY.md)

- Heartbeat files: Periodic check-in instructions (HEARTBEAT.md)

Now every agent reads its memory on boot. Toscan, my main orchestrator, knows my writing style, project history, and decision patterns. No repetition. No retraining.

The result? My agents get smarter over time instead of starting fresh.
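The "read memory on boot" step could look something like this minimal sketch. It assumes a hypothetical workspace layout modeled on the three file types listed above. The function name and the choice to include only yesterday's and today's logs are illustrative assumptions, not the exact setup Toscan uses.

```python
from datetime import date, timedelta
from pathlib import Path


def load_boot_context(workspace: Path, today: date, days_back: int = 1) -> str:
    """Build one context string from the memory files a fresh session should read.

    Assumed (hypothetical) layout, modeled on the files listed above:
      workspace/MEMORY.md             curated long-term memory
      workspace/HEARTBEAT.md          periodic check-in instructions
      workspace/memory/YYYY-MM-DD.md  raw daily logs
    """
    sections = []
    for name in ("MEMORY.md", "HEARTBEAT.md"):
        path = workspace / name
        if path.exists():
            sections.append(f"## {name}\n{path.read_text(encoding='utf-8')}")
    # Oldest log first, so the most recent notes end up closest to the task.
    for offset in range(days_back, -1, -1):
        day = today - timedelta(days=offset)
        path = workspace / "memory" / f"{day.isoformat()}.md"
        if path.exists():
            sections.append(f"## memory/{path.name}\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(sections)
```

Missing files are skipped rather than treated as errors. A brand-new agent therefore boots with an empty context and builds its memory over time. The returned string would be prepended to the session's instructions before the first task.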

Architectural Constraints That Matter

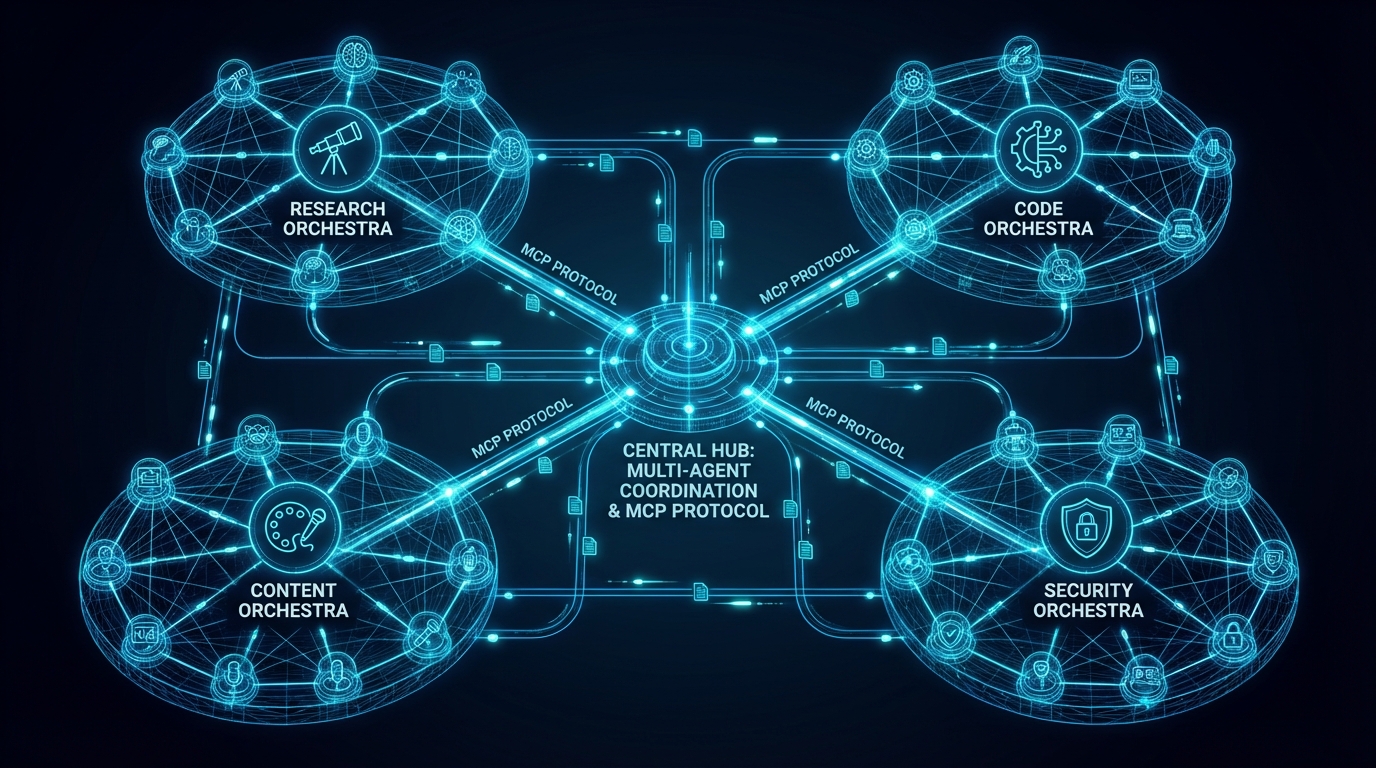

Here's a constraint that saved me from chaos: sub-agents can't spawn sub-agents.

Think about it. If every agent could spawn more agents, you'd get an exponential explosion. One research task becomes 50 agents. Your token budget dies. Your attention fractures.

Instead, I built a hub-and-spoke model:

- One orchestrator per workflow

- Sub-agents report back to their orchestrator

- Orchestrators can talk to other orchestrators

- Clean hierarchy, clear responsibility

My Security Audit Orchestra spawned 4 Sonnet agents to scan repositories in parallel. Each Sonnet reported back to the Opus orchestrator. No sub-sub-agents. No chaos.

Labels track everything. Sessions provide isolation. Results flow back cleanly.
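Here is a minimal sketch of the "no sub-sub-agents" rule. It assumes a hypothetical in-process `Agent` class rather than any real platform's spawning API. The whole constraint comes down to one check at spawn time: anything with a parent is a worker, and workers cannot spawn.

```python
from dataclasses import dataclass, field
from typing import Optional


class SpawnError(Exception):
    """Raised when a sub-agent tries to spawn another agent."""


@dataclass
class Agent:
    label: str                      # labels make every session traceable
    model: str                      # e.g. "opus" for orchestrators, "sonnet" for workers
    parent: Optional["Agent"] = None
    results: dict = field(default_factory=dict)

    def spawn(self, label: str, model: str) -> "Agent":
        # Hub-and-spoke rule: only top-level orchestrators may create workers.
        if self.parent is not None:
            raise SpawnError(f"{self.label} is a sub-agent and cannot spawn {label}")
        return Agent(label=label, model=model, parent=self)

    def report(self, result: str) -> None:
        # Workers never talk to each other; results flow back to the orchestrator.
        if self.parent is None:
            raise ValueError("an orchestrator has no parent to report to")
        self.parent.results[self.label] = result
```

Because the check runs on every spawn, the tree can never get deeper than two levels. Orchestrator-to-orchestrator messaging is deliberately left out of this sketch.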

Monitoring: You Can't Optimize What You Can't See

I'm running 6 different orchestras simultaneously. How do I know what's working?

I built monitoring into everything:

- Token usage tracking: Know exactly where your budget goes

- Session status dashboards: See which agents are active, blocked, or failed

- Cost monitoring: Even "free" subscriptions have usage patterns worth understanding

My dashboard shows:

- 47 active sessions this week

- $0 token costs (subscription covers everything)

- 89% success rate on automated tasks

- Average task completion: 12 minutes

Without monitoring, orchestration is just expensive chaos.
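Dashboard numbers like these can be rolled up from plain per-session records. This sketch assumes a hypothetical `SessionRecord` shape and status vocabulary. It shows one reasonable way to compute success rate, average completion time, and token spend per orchestra. It is not the actual dashboard behind the figures above.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass
class SessionRecord:
    orchestra: str   # e.g. "research", "content-pipeline"
    status: str      # hypothetical states: "done", "failed", "blocked", "active"
    tokens: int
    minutes: float   # wall-clock time from spawn to result


def summarize(sessions: list) -> dict:
    """Roll raw session records up into the kind of numbers a dashboard shows."""
    finished = [s for s in sessions if s.status in ("done", "failed")]
    done = [s for s in finished if s.status == "done"]
    tokens = Counter()
    for s in sessions:
        tokens[s.orchestra] += s.tokens
    return {
        "sessions": len(sessions),
        "by_status": dict(Counter(s.status for s in sessions)),
        # Success rate counts only sessions that reached an end state (done or failed).
        "success_rate": len(done) / len(finished) if finished else None,
        # Average completion time uses successful sessions only.
        "avg_minutes": mean(s.minutes for s in done) if done else None,
        "tokens_by_orchestra": dict(tokens),
    }
```

Active and blocked sessions are left out of the success rate on purpose. Otherwise a busy afternoon would look like a run of failures.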

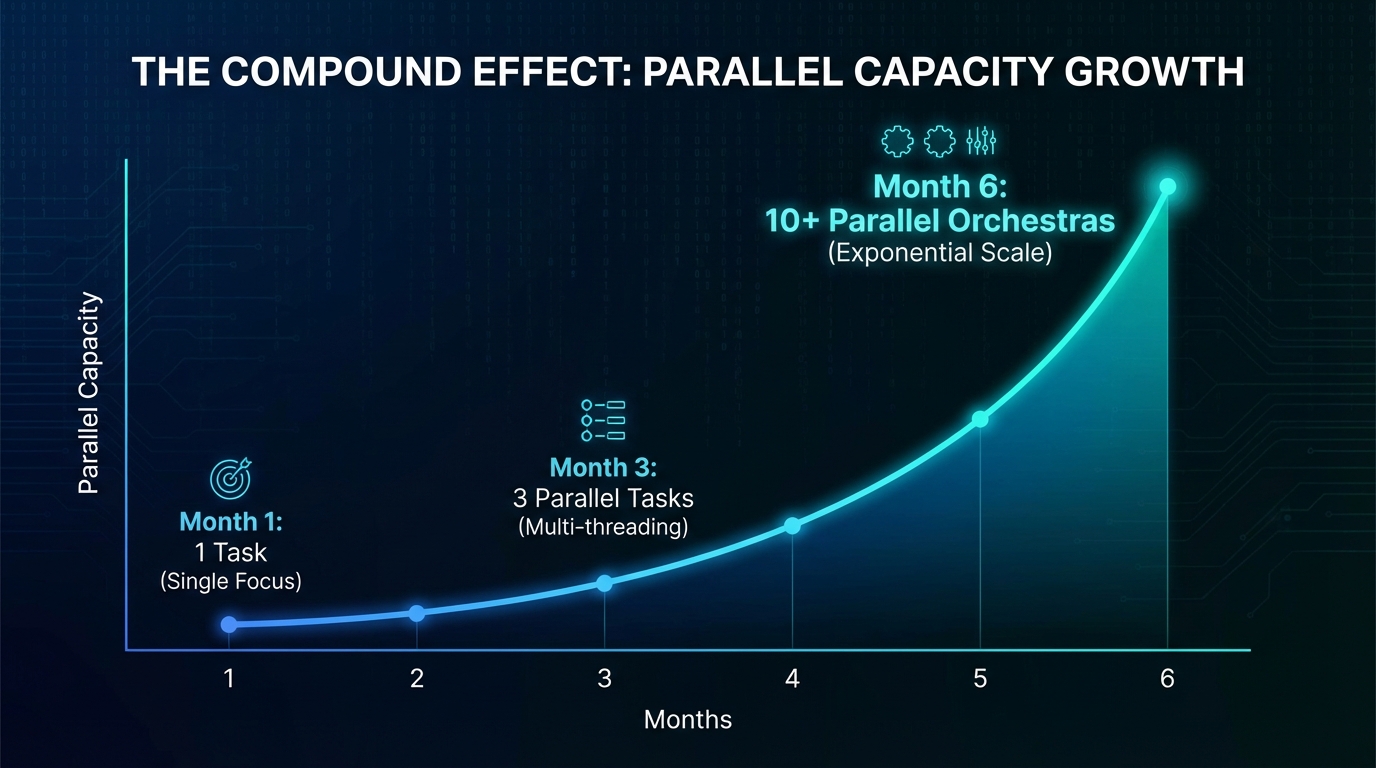

The Scaling Story

Let me show you the compound effect in practice.

Month 1: One Opus agent doing everything

- Research: 4 hours manual work

- Writing: 6 hours of back-and-forth

- Code reviews: 2 hours of context switching

Month 3: Added Sonnet workers for parallelism

- Research: 15 minutes (fan-out to 5 workers)

- Writing: 45 minutes (research already done)

- Code reviews: 20 minutes (parallel analysis)
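The "fan-out to 5 workers" step is the core of the Month 3 speedup. Below is a minimal sketch using Python's `asyncio`. It assumes a hypothetical `run_worker` coroutine standing in for a real model call. A semaphore caps concurrency at five, so extra topics queue instead of spawning more workers.

```python
import asyncio


async def run_worker(topic: str) -> str:
    # Hypothetical stand-in for a real model call (e.g. one Sonnet session).
    await asyncio.sleep(0.01)
    return f"notes on {topic}"


async def fan_out(topics: list[str], max_parallel: int = 5) -> dict[str, str]:
    """Run one worker per topic, at most max_parallel at a time."""
    semaphore = asyncio.Semaphore(max_parallel)

    async def bounded(topic: str) -> str:
        async with semaphore:
            return await run_worker(topic)

    # gather preserves input order, so results line up with topics.
    results = await asyncio.gather(*(bounded(t) for t in topics))
    return dict(zip(topics, results))
```

Calling `asyncio.run(fan_out(["pricing", "features", "reviews"]))` returns one entry per topic. The orchestrator then merges the notes into a single brief.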

Month 6: Added Haiku for cost optimization

- File operations: Free (Haiku handles everything)

- Git commands: Automated (no more manual branches)

- Housekeeping: Invisible (PRs appear like magic)
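The Month 1 to Month 6 progression amounts to routing each kind of work to a model tier. This sketch uses a hypothetical routing table. The task kinds and the mapping are illustrative, inferred from the roles described above, not a real API.

```python
from enum import Enum


class Tier(Enum):
    OPUS = "opus"      # orchestration and judgment calls
    SONNET = "sonnet"  # parallel research, writing, code analysis
    HAIKU = "haiku"    # mechanical housekeeping


# Hypothetical routing table; task kinds are illustrative labels.
ROUTES = {
    "plan": Tier.OPUS,
    "research": Tier.SONNET,
    "write": Tier.SONNET,
    "review": Tier.SONNET,
    "file_op": Tier.HAIKU,
    "git": Tier.HAIKU,
}


def pick_model(task_kind: str) -> Tier:
    # Unknown work defaults to the most capable tier rather than failing silently.
    return ROUTES.get(task_kind, Tier.OPUS)
```

Defaulting to the most capable tier costs more on unfamiliar tasks. In exchange, a new kind of work never lands on a model that can't handle it.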

Today: Different orchestras for different purposes

- Research Orchestra: Handles all competitive analysis

- Code Shipping Orchestra: Manages feature development

- Content Pipeline Orchestra: Produces this article series

- Security Audit Orchestra: Monitors all repositories

- Infrastructure Orchestra: Maintains deployments

- Evy's Jeannie Orchestra: Isolated system for my wife

Each orchestra makes me faster. The time savings compound. More orchestras = more parallel work capacity.

The 10x Effect

Here's what compound means in practice:

- Week 1: Manual research → 4 hours

- Week 4: Research orchestra → 15 minutes while I work on something else

- Week 8: Research + writing + code shipping all running simultaneously

I'm not 10x faster at individual tasks. I'm 10x faster because I can run 10 tasks in parallel.

Last month I shipped:

- FidelTrad translation improvements

- The Door portfolio redesign

- CPMS architecture documentation

- This LinkedIn article series

- Security audits across 4 repositories

All while maintaining my day job as a Project Manager.

The orchestras run while I sleep. I wake up to completed research, drafted content, and deployed features.

Cross-Agent Communication

The next frontier is agents talking to agents.

Right now, my orchestras communicate through me. The Research Orchestra delivers a brief. I feed it to the Content Pipeline Orchestra. Manual handoff.

I'm building direct agent-to-agent protocols:

- Standardized message formats

- Secure communication channels

- Result validation between agents
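Here is a minimal sketch of those three pieces, using only Python's standard library. A dataclass serialized to canonical JSON serves as the standardized format. An HMAC-SHA256 tag with a shared key protects integrity and authenticates the sender; it does not encrypt anything. Validation rejects bad signatures and unknown message kinds. The key, field names, and kind vocabulary are hypothetical placeholders.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass

SHARED_KEY = b"replace-with-a-real-secret"  # hypothetical; load from a secret store in practice
KNOWN_KINDS = {"research_brief", "draft_request", "vulnerability", "ticket"}  # illustrative


@dataclass(frozen=True)
class AgentMessage:
    sender: str      # e.g. "research-orchestra"
    recipient: str   # e.g. "content-pipeline-orchestra"
    kind: str        # must be one of KNOWN_KINDS
    payload: dict


def seal(msg: AgentMessage, key: bytes = SHARED_KEY) -> dict:
    # sort_keys gives a canonical body, so the same message always gets the same tag.
    body = json.dumps(asdict(msg), sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "sig": tag}


def open_sealed(envelope: dict, key: bytes = SHARED_KEY) -> AgentMessage:
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking timing information during the comparison.
    if not hmac.compare_digest(expected, envelope["sig"]):
        raise ValueError("signature mismatch: message altered or wrong key")
    data = json.loads(envelope["body"])
    if data.get("kind") not in KNOWN_KINDS:
        raise ValueError(f"unknown message kind: {data.get('kind')!r}")
    return AgentMessage(**data)
```

A receiving orchestra calls `open_sealed` before acting on anything. A tampered or malformed message then fails loudly instead of triggering work.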

Imagine: the Research Orchestra discovers a new trend. It automatically triggers the Content Pipeline Orchestra to draft an article. The Security Audit Orchestra detects a vulnerability. It automatically creates Infrastructure Orchestra tickets.
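Both scenarios fit a publish/subscribe pattern: one orchestra publishes an event, and every orchestra subscribed to it reacts. This in-process sketch uses hypothetical event names and handlers. A real deployment would also need durable queues and the validation step from the list above.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], str]


class EventBus:
    """Tiny in-process publish/subscribe hub (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, event: str, handler: Handler) -> None:
        self._subscribers.setdefault(event, []).append(handler)

    def publish(self, event: str, payload: dict) -> List[str]:
        # Every subscriber runs; no human approves the handoff.
        return [handler(payload) for handler in self._subscribers.get(event, [])]


def draft_article(payload: dict) -> str:
    # Hypothetical Content Pipeline Orchestra entry point.
    return f"content-pipeline: drafting article on {payload['trend']}"


def open_ticket(payload: dict) -> str:
    # Hypothetical Infrastructure Orchestra entry point.
    return f"infrastructure: ticket for {payload['issue']} in {payload['repo']}"
```

Wiring is then two lines: `bus.subscribe("trend_detected", draft_article)` and `bus.subscribe("vulnerability_found", open_ticket)`. After that, the Research and Security Audit Orchestras only need to call `publish`.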

No human in the loop. Pure orchestration.

What's Coming

Deloitte predicts 50% enterprise adoption of multi-agent systems by 2026. We're already there.

The future I'm building:

- MCP standardization: The Model Context Protocol as a universal way for agents to connect to tools and data

- Persistent specialized agents: Agents that live across sessions, accumulating expertise

- Self-improving prompts: Agents that optimize their own instructions based on outcomes

- Cross-platform orchestration: Agents working across Claude, GPT, Gemini simultaneously

I'm not waiting for vendors to build this. I'm building it now.

Memory Engineering Standards

The research is clear: AI-native memory systems outperform traditional databases for agent contexts.

Ajith's work on memory engineering shows that structured memory files beat vector databases for most orchestration use cases. Simpler. Faster. More reliable.

My memory system:

- Flat files over databases

- Human-readable formats

- Version controlled

- Agent-writable

Every decision gets logged. Every lesson gets preserved. Every pattern gets documented.

My agents learn from history instead of repeating it.
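A minimal sketch of the "agent-writable" side of this setup follows. It assumes the same hypothetical MEMORY.md plus memory/YYYY-MM-DD.md layout. Agents append timestamped lines to the daily log and promote curated lessons into long-term memory. Everything stays as plain Markdown, so version control is just committing the directory with git.

```python
from datetime import datetime
from pathlib import Path


def log_entry(workspace: Path, text: str, when: datetime, kind: str = "note") -> Path:
    """Append a timestamped line to that day's log, creating the file if needed."""
    log = workspace / "memory" / f"{when.date().isoformat()}.md"
    log.parent.mkdir(parents=True, exist_ok=True)
    with log.open("a", encoding="utf-8") as fh:
        fh.write(f"- {when:%H:%M} [{kind}] {text}\n")
    return log


def promote(workspace: Path, lesson: str) -> None:
    """Copy a curated lesson into long-term MEMORY.md, skipping exact duplicates."""
    memory = workspace / "MEMORY.md"
    existing = memory.read_text(encoding="utf-8") if memory.exists() else ""
    line = f"- {lesson}\n"
    if line not in existing:
        with memory.open("a", encoding="utf-8") as fh:
            fh.write(line)
```

Append-only writes keep diffs small and readable, which makes a weekly git history double as an audit trail of what each agent decided.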

The Cost of Not Scaling

McKinsey's research on "one year of agentic AI" shows a clear pattern: teams that embrace orchestration early build sustainable competitive advantages. Teams that wait fall behind permanently.

The choice isn't whether to build orchestration. It's whether to build it before or after your competitors.

I chose before.

Start Your Compound Effect

You already have the tools. Claude has sub-agent spawning. OpenClaw has memory systems. The patterns exist.

Your compound effect starts with your first orchestra.

Build it this weekend. Start simple: one conductor, one worker. Add memory systems. Monitor everything.

Next weekend, build your second orchestra. Then your third.

In 6 months, you'll have an AI organization working while you sleep.

The question isn't whether this future will happen. It's whether you'll be building it or watching others build it.

I choose building.

What do you choose?

Sources & Inspiration

- AI-Native Memory: Persistent Agents — Memory engineering standards, indexing, and access controls

- Deloitte AI Agent Orchestration — 50% enterprise adoption prediction by 2026

- McKinsey Agentic AI Lessons — One year of practical agentic AI experience

- 7 Agentic AI Trends 2026 — 2026 as inflection point for scaling agentic systems

- Multi-Agent Systems 2026 — From individual agents to orchestration

- AI Agent Memory Security — Security challenges with persistent memory systems

- Future AI Agents Trends — More autonomous, complex workflows without human oversight

- Scaling AI Agents via Contextual Intelligence — Contextual intelligence for agent scaling

Previously in this series:


Setting Up Your Stage: Infrastructure for AI Agent Orchestration

Your agents need their own home. Here's how I built mine.

Choosing Your Conductor: The AI Engine That Runs Your Orchestra

Your model choice makes or breaks everything. Here's what actually works.

Building the Stage: Platforms and Tools for AI Orchestration

The platform you choose determines what's possible. Here's what actually works.

Your First Orchestra: From Solo Act to Multi-Agent Symphony

Stop drowning in single-agent chaos. Here's how I built my first multi-agent workflow that saved me 4 hours a day.