The Translation Test: Why I Built What Others Just Theorize About

By Conny Lazo

Builder of AI orchestras. Project Manager. Shipping things with agents.

Code is about to cost nothing, and knowing what to build is about to cost you everything.

That's how Nate B. Jones opened his latest video, and it hit me like a lightning bolt. Not because the idea was new—I've been living this reality for months. But because Nate articulated something I'd experienced firsthand without fully understanding its implications.

Six months ago, I built FidelTrad, an AI-powered book translation tool. Not because I'm a linguist or because I saw a market opportunity, but because I had a specific problem: I wrote a book in English and wanted to translate it into French, German, Portuguese, and Spanish. Quotes from human translators, priced per language and per book, would have cost me an arm and a leg. We're talking thousands per language, months of back-and-forth, and no guarantee they'd nail the nuance.

What happened next changed how I think about the future of work entirely.

The Translation Reality Check

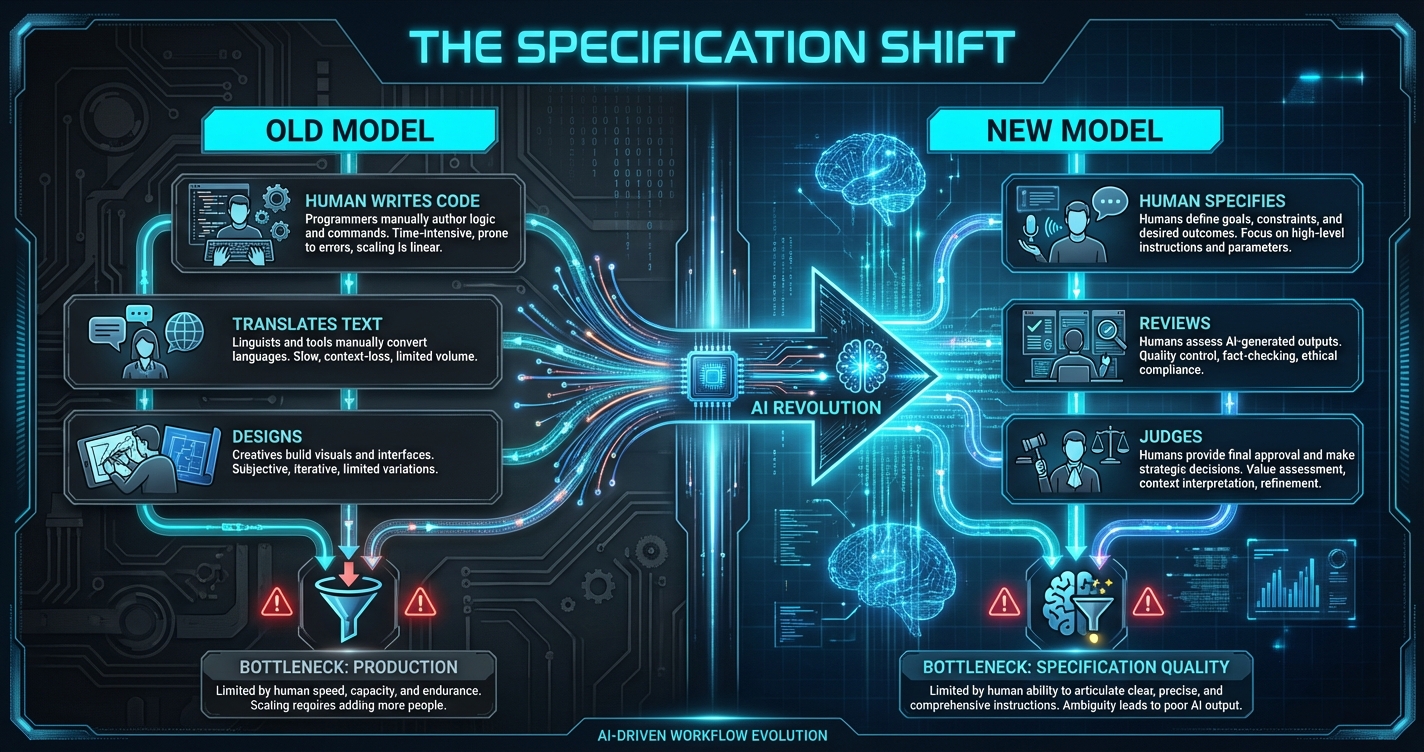

Here's what I discovered building FidelTrad: AI didn't eliminate the need for human translators. It elevated what human judgment looks like.

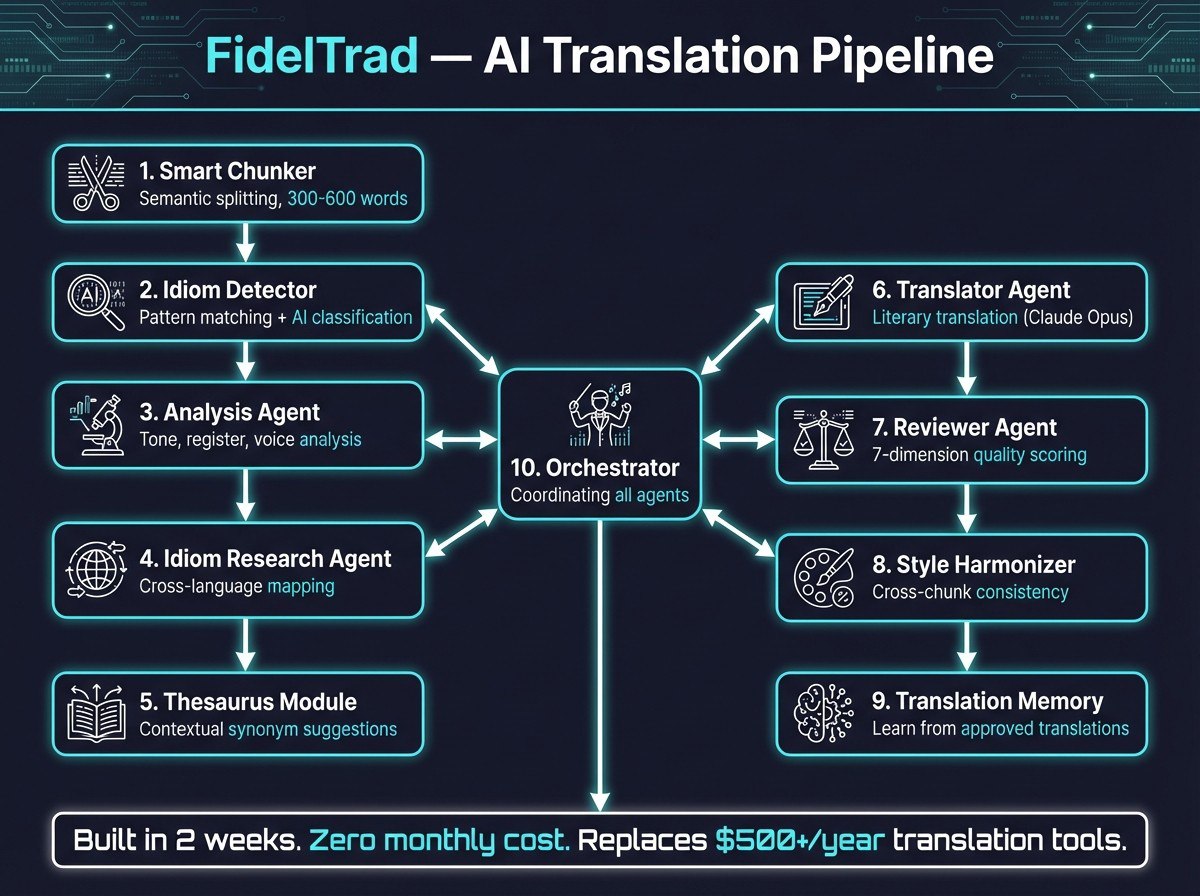

My system runs on Next.js with Claude API integration, orchestrating three distinct AI models—Claude Opus 4.6 for complex literary passages, Sonnet 4 for technical content, and Haiku 4.5 for straightforward text. The architecture handles 32GB of contextual memory on my Pop!_OS workstation, processing entire books while maintaining narrative consistency across chapters.
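To make the routing idea concrete, here is a minimal TypeScript sketch of how passages could be assigned to the three model tiers. It is an illustration, not FidelTrad's actual code: the type names, the `ROUTES` table, and the tier labels `opus`, `sonnet`, and `haiku` are assumptions, and the labels are placeholders rather than real API model identifiers.

```typescript
// Hypothetical content categories, mirroring the three kinds of text described above.
type ContentKind = "literary" | "technical" | "straightforward";

// Placeholder tier labels (assumption): not real API model identifiers.
type ModelTier = "opus" | "sonnet" | "haiku";

interface Passage {
  id: string;
  kind: ContentKind;
  text: string;
}

// Routing table: which tier handles which kind of content.
const ROUTES: Record<ContentKind, ModelTier> = {
  literary: "opus",
  technical: "sonnet",
  straightforward: "haiku",
};

// Look up the tier for a single passage.
function routePassage(passage: Passage): ModelTier {
  return ROUTES[passage.kind];
}

// Group passage ids by tier so each model receives one batch.
function planBatches(passages: Passage[]): Record<ModelTier, string[]> {
  const batches: Record<ModelTier, string[]> = { opus: [], sonnet: [], haiku: [] };
  for (const passage of passages) {
    batches[routePassage(passage)].push(passage.id);
  }
  return batches;
}
```

Keeping the routing in a plain lookup table means the human decision (which model handles which content) lives in one place and can be changed without touching the rest of the pipeline.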

But here's the kicker: The AI does 90% of the translation work perfectly. The other 10%—the specification, context, and judgment calls—that's where I add all the value.

I don't translate anymore. I specify. I define tone, context, and cultural nuances. I catch when the AI mistranslates a technical term or misses a cultural reference. I architect the multi-agent workflow that determines which model handles which type of content.

Sound familiar? It should. It's exactly what Nate B. Jones describes as the shift from production to specification work.

The Broader Pattern Nate Identified

In his video, Nate points to translation as the canary in the coal mine for all knowledge work. He references François Chollet's framework: when AI achieves near-perfect task capability, human roles transform rather than disappear. The Bureau of Labor Statistics still projects modest growth for translation jobs despite AI reaching human-level translation capability.

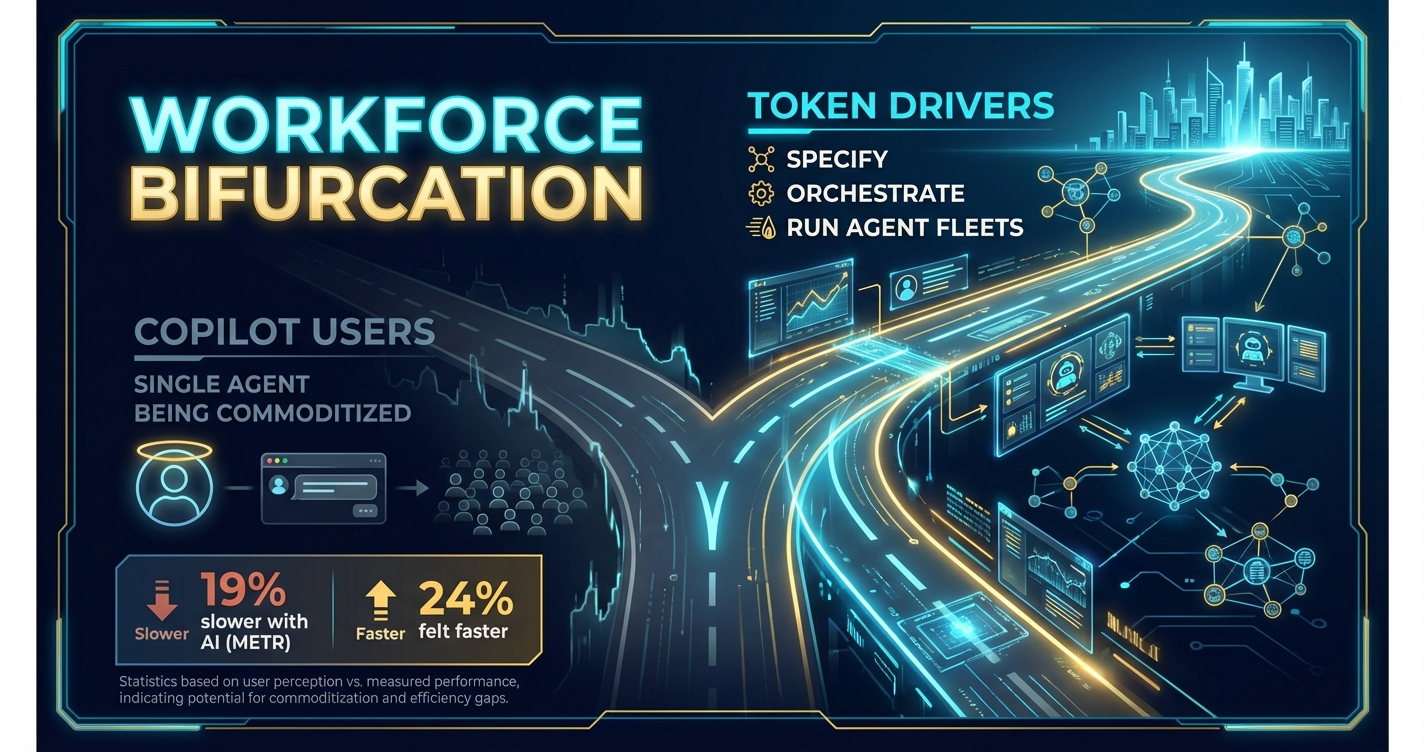

But Nate goes deeper. He identifies two distinct classes emerging across all knowledge work:

High-value "token drivers" who specify precisely, architect systems, and manage agent fleets. They're seeing explosive revenue per employee: companies like Cursor at $16 million per employee, and Midjourney at $200 million in revenue with just 11 people.

Low-leverage workers using single-agent workflows, getting commoditized as entry-level postings drop by two-thirds and 70% of hiring managers say AI can handle intern-level work.

I Built What Nate Theorizes About

Here's what makes FidelTrad more than just a translation tool—it's proof of Nate's thesis in action:

Multi-agent orchestration: My system runs what Nate calls an "agent fleet." Three Claude models with specialized roles, coordinated by human specification. It's not one AI doing everything; it's multiple AIs doing what they're best at, directed by human judgment.

The token driver model: I went from facing thousands per language and months of waiting to processing a full book translation in weeks for a fraction of the cost. But the real value isn't savings — it's that I can now translate into four languages simultaneously, something that was previously economically impossible for an independent author.

Specification as the scarce resource: The hardest part of building FidelTrad wasn't the technical architecture. It was learning to specify intent precisely enough that AI agents could execute reliably. "Make it sound natural" isn't a specification. "Maintain the author's academic tone while simplifying technical jargon for a business audience" is.

This is the inversion Nate describes: when production costs collapse to zero, specification becomes everything.
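The difference between a vague request and a real specification can be sketched in code. The TypeScript below is a hypothetical illustration, not FidelTrad's implementation: `TranslationSpec`, `findGaps`, and `renderInstructions` are names invented here, and the choice of required fields is an assumption based on the example above.

```typescript
// Hypothetical shape of a translation specification (assumed fields).
interface TranslationSpec {
  targetLanguage: string;
  tone: string; // e.g. "academic"
  audience: string; // e.g. "a business audience"
  jargonPolicy: "keep" | "simplify";
}

// Return the required fields that are missing or blank.
// An empty result means the spec is complete enough to hand to an agent.
function findGaps(spec: Partial<TranslationSpec>): string[] {
  const required: (keyof TranslationSpec)[] = [
    "targetLanguage",
    "tone",
    "audience",
    "jargonPolicy",
  ];
  return required.filter((key) => {
    const value = spec[key];
    return value === undefined || value.trim() === "";
  });
}

// Turn a complete spec into plain-language instructions for a model.
function renderInstructions(spec: TranslationSpec): string {
  const jargonLine =
    spec.jargonPolicy === "simplify"
      ? `Simplify technical jargon for ${spec.audience}.`
      : `Keep technical jargon as written; the audience is ${spec.audience}.`;
  return [
    `Translate into ${spec.targetLanguage}.`,
    `Maintain the author's ${spec.tone} tone.`,
    jargonLine,
  ].join("\n");
}

// "Make it sound natural" fills in one field at best and leaves the rest open.
const vagueGaps = findGaps({ tone: "natural" });
// vagueGaps is ["targetLanguage", "audience", "jargonPolicy"]
```

The point of `findGaps` is that a spec can be rejected before any tokens are spent, which is cheaper than discovering the ambiguity in the output.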

The Dark Factory I'm Living In

What keeps me up at night, and gets me out of bed every morning, is realizing I'm already operating what is often called a "dark factory": a production line that runs with the lights off. My translation pipeline runs with minimal human intervention. Three AI agents coordinate, make decisions, and produce output that's often better than what I could create manually.

But I'm not just observing this transformation—I'm orchestrating it. And that's the difference between thriving and surviving in the AI transition.

The data backs this up. CodeRabbit's analysis found AI-generated code produces 1.7x more logic issues than human code. The problem isn't AI capability; it's specification quality. When I write clear specifications for FidelTrad's agents, they execute flawlessly. When I'm vague, they build the wrong thing perfectly.

The J-Curve We're All Riding

Here's where Nate's framework gets personally uncomfortable: we're in the trough of a productivity J-curve right now. Census Bureau research shows AI deployment initially reduces productivity by 1.3 percentage points, with some firms dropping 60 points before recovery.

I lived this. FidelTrad's first month was brutal. The AI made translation errors that human translators never would. I spent more time fixing output than I would have writing from scratch. But once I learned to specify properly—to architect the multi-agent system and define clear success criteria—productivity exploded.

That's the pattern across knowledge work. The METR study found that experienced developers were 19% slower with AI tools, even though they had predicted a 24% speedup beforehand and still believed afterward that AI had made them faster. The disconnect isn't the technology; it's the specification skill gap.

The Skills That Actually Matter

Building FidelTrad taught me that the future isn't about learning to code or mastering specific AI tools. It's about developing what engineers have spent 50 years perfecting: the ability to translate vague human intent into executable specifications.

Think in systems, not documents. FidelTrad isn't a document generator—it's a system with inputs, rules, and validation criteria. Once specified, it runs autonomously and improves with feedback.

Make outputs verifiable. Every FidelTrad translation includes confidence scores, alternative phrasings, and cultural context flags. I can measure quality objectively, not by vibes.

Audit for coordination overhead. Traditional translation workflows involved project managers, reviewers, and coordinators. FidelTrad eliminates that entirely—the system coordinates itself based on specifications I defined once.
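The "make outputs verifiable" point above can be sketched as a data structure plus a review rule. This TypeScript is a hypothetical illustration, not FidelTrad's actual code: the field names, the 0-to-1 confidence scale, and the 0.85 default threshold are all assumptions.

```typescript
// Hypothetical shape of one translated segment with its quality metadata.
interface TranslatedSegment {
  id: string;
  source: string;
  translation: string;
  confidence: number; // assumed scale: 0 (no confidence) to 1 (full confidence)
  alternatives: string[]; // other candidate phrasings
  culturalFlags: string[]; // e.g. "idiom", "local reference"
}

// A segment needs human review if confidence is low or any cultural flag is set.
function needsReview(segment: TranslatedSegment, minConfidence: number): boolean {
  return segment.confidence < minConfidence || segment.culturalFlags.length > 0;
}

// Build the human review queue, least confident segments first.
// filter() returns a new array, so sorting does not mutate the input.
function reviewQueue(
  segments: TranslatedSegment[],
  minConfidence = 0.85, // assumed threshold, to be tuned per project
): TranslatedSegment[] {
  return segments
    .filter((segment) => needsReview(segment, minConfidence))
    .sort((a, b) => a.confidence - b.confidence);
}
```

With a rule like this, "quality" becomes a count of segments in the queue rather than an impression, and the threshold itself is part of the specification.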

These aren't technical skills. They're thinking frameworks that work across any domain where AI is transforming production.

The Choice We All Face

Nate ends his video with a challenge: "The technology is not going to wait for organizations and individuals to catch up. We have to lean in and help each other."

He's right. But I'd go further: The window for choosing which side of the bifurcation you end up on is closing faster than most people realize.

I built FidelTrad not because I'm smarter than anyone else, but because I treated AI as a system to architect rather than a tool to use. That mental shift—from "How can AI help me work faster?" to "How can I specify what AI should build?"—is what separates high-value token drivers from low-leverage workers getting commoditized.

The translation industry figured this out first because AI reached human-level capability there earlier. But Nate's thesis is that all knowledge work is converging on the same substrate: translating vague human intent into precise instructions that human or machine systems can execute.

Whether you're specifying a translation, a software feature, or a business strategy, the cognitive task is identical. And the people who master specification—who can architect agent fleets and validate outputs against intention—they're capturing exponential value.

The rest are getting compressed.

What Are You Building With It?

I didn't set out to prove Nate B. Jones's thesis about the future of work. I just wanted to translate some books. But building FidelTrad taught me that the AI revolution isn't coming—it's here. The only question is whether you're architecting it or being architected by it.

The translation test isn't about translation. It's about whether you can specify intent precisely enough that AI systems can execute it reliably. Whether you can think in systems, validate outputs, and orchestrate multiple agents toward a defined outcome.

If you can pass the translation test—if you can master specification as a core skill—the world is your oyster. If you can't, you're in for a rougher ride than most people expect.

What are you building with it?